RTPVS

RTPVS is a realtime visibility determination and scene management system for interactive 3D environments. It requires no offline precomputation, has a minimal memory footprint, and scales well to huge scenes. To reduce runtime overhead, scene data can be optionally pre-computed. Queries (such as occlusion) can be made at any point, or the system can be set to issue them automatically and return the results at every frame. RTPVS supports SIMD and multithreading, and is entirely CPU-based to avoid causing stalls by reading information from the GPU.

Occluder management

RTPVS is set up to accept an arbitrary number of static and dynamic objects and dedicated static occluder geometry. Based on their size and worth relative to the cost, objects may also be used as occluders. Static geometry is arranged into cells, and visible cells are rasterized into a depth buffer with a high-performance software renderer that makes extensive use of SSE. The software renderer does no fragment shading, and can renderer 80,000 polygons into a depth buffer in 5ms with a resolution of 1024x768, or in 2ms at a resolution of 640x480. Allocating multiple threads to the system could decrease the time required, but it won't make a significant difference unless rendering a very large number of polygons. By using the results of the previous frame, or by doing optional pre-processing of the scene (described later), the system can determine which cells are likely to provide occluders, drastically reducing the number of rendered polygons required. The results of this step can determine what physical cells are visible, and allows RTPVS to skip rendering a large portion of the scene. As a result, most scenes require less than 1 ms to completely process a frame with a single thread. The gains increase as the size of a map increases.The rasterizer renders spans that are generated by half-space planes with boundary conditions to tolerate meshes with imprecise vertices that cause holes severe enough to invalidate GPU-based HZB techniques (meshes exported from UnrealED are particularly nasty offenders). If multithreading is used, vertex transformation, bucket classification and rasterization are the three largest parallel stages in the rendering pipeline. Bucket classification determines which thread(s) can render the polygon depending on their assigned regions of the screen. The rasterizer uses guard bands for screen clipping and preventing threads from overwriting regions of the screen not assigned to them when dealing with polygons that span multiple buckets. Span computation and rasterization is vectorized to calculate a 4x4 block at once (16 pixels). Tile-based rasterization was found to be roughly 30% less efficient due to having to completely (SSE, masking instead of branching) compute partially filled tiles. The large amount of data that the software rasterizer processes within its time constraints makes it the most complex subsystem in RTPVS.

Using a formula that prioritizes objects as occluders based on the size of their screen-space projections, the nearest N objects can be used as occluders. Their entire mesh can be used as an occluder volume, or if it's non-trivial, RTPVS supports occluder generation for arbitrary solid meshes at load-time, based on voxelization and box expansion as described here. Object's meshes are voxelized, and a series of points are chosen to be the center points for cubes that expand as far as possible without consuming any non-solid voxels. Once the algorithm fails to compute any boxes larger than a threshold without starving, the set of boxes generated is converted to a triangle mesh, and internal faces are either clipped to external faces or removed if they are completely internal. This triangle mesh is then used as the occluder volume for the object. Some form of minimal convex hull generation would be able to approximate the edges of objects with greater accuracy, but generating the largest convex hull that contains no non-solid voxels takes far longer than expanding cubes. Overlapping regions of occluder volumes in screen-space can be used for occluder fusion, trivially rejecting occluders whose volumes would be obscured by the occluder volume of an object higher up in the prioritized list of occluders, allowing more candidates to be considered without exceeding N dynamic occluder volumes.

Dynamic visibility determination

Objects are arranged in a dynamic BVH that re-arranges itself when it becomes significantly unbalanced. The rasterizer divides the rendered z-buffer into a set of tiles and computes the maximum z for each tile. Bounding volumes for the nodes in the BVH are projected to screen-space, and volumes whose projections' nearest z are greater than the maximum z for tiles that they intersect in screen-space can be trivially rejected (standard hi-z test). Hi-z testing has a moderately high percentage of false positives, so for objects whose visibility must be tested with greater accuracy (such as light volumes), RTPVS supports occlusion culling with arbitrary triangle meshes, although simple primitives (AABB, conservative sphere)that can be represented with a few polygons are the most efficient type meshes. Objects in the system can be configured to automatically issue occlusion queries to determine their visibility.Although HZB and HOM techniques support GPU acceleration, they suffer from readback stalls, especially on a heavily loaded GPU. Unlike the method used in RTPVS, GPU-based occlusion queries also require a significant amount of time to complete. By default, RTPVS runs in a separate thread. Queries issued directly by the client are evaluated synchronously in the calling thread due to the high throughput of the system. The rasterizer efficiently handles tens of thousands of polygons per frame, so rasterizing 6 triangles per AABB (2 triangles for each of the AABB's 3 front-facing sides) for a large number of AABBs is trivial. Visibility information for the scene is maintained in two buffers, a current buffer and an active buffer, to allow queries to be processed immediately without requiring synchronization. The software rasterizer and visibility tests support multithreading, but this isn't recommended for complex clients, as the system is efficient enough to work effectively with a single dedicated thread, and the client could utilize other CPU cores for other unrelated purposes. Occlusion tests can be issued by the client application at any time, but this may result in a pipeline stall, and the client would have to wait to synchronize with RTPVS, albeit with a far lower penalty than reading back from the GPU. The most recent visibility results and scene management information can be read from the system at any time with no synchronization penalty.

Optional pre-computed information

RTPVS also supports a static optimization system that can provide conservative initial visibility information for a scene with static occluder geometry. Although the information provided would never be more accurate than the results from the realtime visibility determination system, it could be used on more primitive system that would not support the high-performance rasterizer and occlusion queries. The preprocessor accepts an arbitrary triangle mesh as input, and produces a BSP tree in which each node is a set of convex polygons. Each plane in the BSP is then pushed down the tree and clipped by the other planes it passes through. When it arrives at a node that has two leaves as children, it is clipped by the convex hull of each child leaf. If each leaf's convex hull touches the other and the polygon was not eliminated during clipping, the clipped polygon is marked as a portal connecting both leaves, and is then added to a list of portals. Connectivity information is generated based on portals and the leaves that they connected. For each leaf, a frustum with a 179 degree field-of-view is created at each portal, facing out of the convex hull. This frustum is recursively clipped by other leaves to which this leaf is connected, and by the portals that connect the leaves. Whenever the frustum is clipped by a portal, a temporary frustum is created on the other side of the portal in order to maintain the frustum's convex shape. The original frustum is restored after returning to the leaf prior to traversing the portal. Each leaf that the set of clipped frustums are able to visit is considered visible from the original leaf, based on the condition that the portal is in the camera's frustum at run-time, and that it intersects every portal in a list of screen-space projections of portals that are required to each a leaf. Visible portal traversal does not exhibit transitivity, which is why testing a portal against every portal in the path is necessary (i.e. If A can see B and B can see C, it doesn't mean that A can see C unless (A,B), (A,C) and (B,C) are intersecting pairs in screen-space).When used in conjunction with the runtime, RTPVS can use the set of visible leaves as the set of static occluder geometry, rather than relying on the previous frame's results. Although this subsystem is popular in non-realtime visibility determination systems that do not rely on manually placed portals to divide a scene into sectors, it is the least useful subsystem in RTPVS due to the significant overhead of pre-processing, numerical imprecision with non-trivial geometry, and the marginal advantage provided to the realtime system. Manually placing portals and anti-portals to divide a scene and provide occlusion requires human intervention, and doesn't consider occlusion provided by dynamic objects.

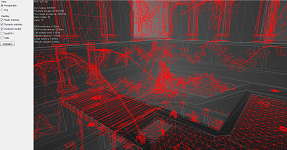

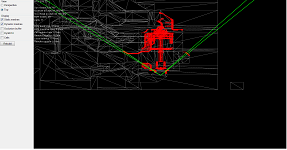

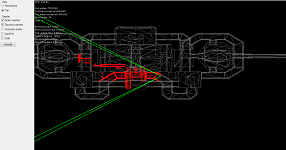

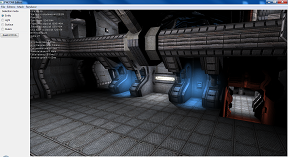

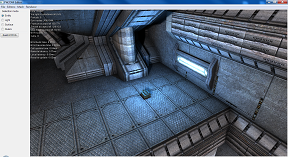

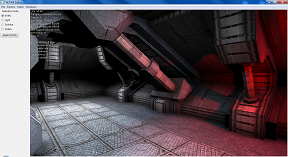

Screenshots

Click to enlarge.

Here are some screenshots of the system in use: